DLRG schedule for spring semester 2025

(GoogleMeet link: https://meet.google.com/qxw-yxyi-pjf )

1) Sunday (Mar. 9, 2025) presented by Rahmeh, “A transformer-based deep learning model for early prediction of lymph node metastasis in locally advanced gastric cancer after neoadjuvant chemotherapy using pretreatment CT images”. url: https://doi.org/10.1016/j.eclinm.2024.102805

2) Sunday (Mar. 23, 2025) presented by Besan, Liyan, Leen and Zaina, “Storynizor: Consistent Story Generation via Inter-Frame Synchronized and Shuffled ID Injection”. url: https://arxiv.org/pdf/2409.19624

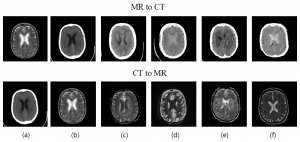

3) Sunday (Apr. 6, 2025) presented by Fatima, “HiFi-Syn: Hierarchical granularity discrimination for high-fidelity synthesis of MR images with structure preservation”. url: https://doi.org/10.1016/j.media.2024.103390

4) Sunday (Apr. 20, 2025) presented by Abdullah, “Ensemble transformer-based multiple instance learning to predict pathological subtypes and tumor mutational burden from histopathological whole slide images of endometrial and colorectal cancer”. url: https://doi.org/10.1016/j.media.2024.103372

5) Sunday (May 4, 2025) presented by Raneem, “Anatomically plausible segmentations: Explicitly preserving topology through prior deformations”. url: https://doi.org/10.1016/j.media.2024.103222

6) Sunday (May 18, 2025) presented by Alaa, “DeepFake-Adapter: Dual-Level Adapter for DeepFake Detection”. url: https://doi.org/10.1007/s11263-024-02274-6

7) Sunday (Jun. 1, 2025) presented by Roua’ and Sara, “Improving cross-domain generalizability of medical image segmentation using uncertainty and shape-aware continual test-time domain adaptation”. url: https://doi.org/10.1016/j.media.2024.103422

DLRG schedule for autumn semester 2024

(GoogleMeet link: https://meet.google.com/wah-nwrt-pqv)

3) Sunday (Dec. 1, 2024) presented by Besan, Liyan, Leen and Zaina, “Zero-shot Generation of Coherent Storybook from Plain Text Story using Diffusion Models”. url: https://arxiv.org/pdf/2302.03900

4) Sunday (Dec. 15, 2024) presented by Israa, “A Survey on Automatic Image Captioning Approaches: Contemporary Trends and Future Perspectives Survey”. doi: 10.1007/s11831-024-10190-8

5) Sunday (Dec. 29, 2024) presented by Rahmeh, “Predicting Choroidal Nevus Transformation to Melanoma Using Machine Learning”. doi: 10.1016/j.xops.2024.100584

6) Sunday (Jan. 12, 2025) presented by Fatima, “DAEGAN: Generative adversarial network based on dual-domain attention-enhanced encoder-decoder for low-dose PET imaging”. doi: 10.1016/j.bspc.2023.105197

DLRG schedule for spring semester 2024

(GoogleMeet link: https://meet.google.com/wah-nwrt-pqv)

5) Sunday (Apr 28, 2024) presented by Abdel Rahman, “Cheap and Quick: Efficient Vision-Language Instruction Tuning for Large Language Models”. url: https://arxiv.org/pdf/2305.15023

6) Sunday (May 12, 2024) presented by Alaa, “ISTVT: Interpretable Spatial-Temporal Video Transformer for Deepfake Detection”. doi: 10.1109/TIFS.2023.3239223

DLRG schedule for autumn semester 2023

(GoogleMeet link: https://meet.google.com/wah-nwrt-pqv)

1) Sunday (Nov 5, 2023) presented by Abdel Rahman, “Prompting Large Language Models with Answer Heuristics for Knowledge-based Visual Question Answering”. url: https://arxiv.org/pdf/2303.01903.pdf

2) Sunday (Nov 19, 2023) presented by Fatima, “ARU-GAN: U-shaped GAN based on Attention and Residual connection for super-resolution reconstruction”. doi: 10.1016/j.compbiomed.2023.107316

3) Sunday (Dec 3, 2023) presented by Israa, “Improved Arabic image captioning model using feature concatenation with pre-trained word embedding”. doi: 10.1007/s00521-023-08744-1

4) Sunday (Dec 17, 2023) presented by Roua’ and Sara, “Stochastic Segmentation with Conditional Categorical Diffusion Models”. url: https://arxiv.org/pdf/2303.08888.pdf

5) Sunday (Dec 31, 2023) presented by Ala, “ResNet-Swish-Dense54: a deep learning approach for deepfakes detection”. doi: 10.1007/s00371-022-02732-7

DLRG schedule for summer semester 2023

(GoogleMeet link: https://meet.google.com/wah-nwrt-pqv)

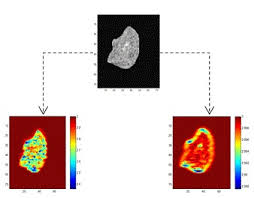

1) Sunday (Jul 23, 2023) presented by Fatima, “LAC-GAN: Lesion attention conditional GAN for Ultra-widefield image synthesis”. url: https://doi.org/10.1016/j.neunet.2022.11.005

2) Sunday (Jul 30, 2023) presented by Abdel Rahman, “NoisyTwins: Class-Consistent and Diverse Image Generation through StyleGANs”. url: https://arxiv.org/pdf/2304.05866.pdf

3) Sunday (Aug 6, 2023) presented by Israa, “Image-Text Embedding Learning via Visual and Textual Semantic Reasoning”. doi: 10.1109/TPAMI.2022.3148470

4) Sunday (Aug 13, 2023) presented by Roua’ and Sara, “Segmentation ability map: Interpret deep features for medical image segmentation”. url: https://doi.org/10.1016/j.media.2022.102726

5) Sunday (Aug 20, 2023) presented by Bara’a, “SECS: An Effective CNN Joint Construction Strategy for Breast Cancer Histopathological Image Classification”. url: https://doi.org/10.1016/j.jksuci.2023.01.017

DLRG schedule for spring semester 2023

(GoogleMeet link: https://meet.google.com/wah-nwrt-pqv)

1) Sunday (Mar 12, 2023) presented by Israa, “Image Captioning for Effective Use of Language Models in Knowledge-Based Visual Question Answering”. url: https://doi.org/10.1016/j.eswa.2022.118669

2) Sunday (Mar 26, 2023) presented by Ala, “Towards Personalized Federated Learning”. url: https://doi.org/10.1109/TNNLS.2022.3160699

3) Sunday (Apr 9, 2023) presented by Fatima, “TCGAN: a transformer-enhanced GAN for PET synthetic CT”. url: https://doi.org/10.1364/BOE.467683

4) Sunday (Apr 30, 2023) presented by Roua’ and Sara, “A novel deep learning model DDU-net using edge features to enhance brain tumor segmentation on MR images”. url: https://doi.org/10.1016/j.artmed.2021.102180

5) Sunday (May 7, 2023) presented by Abdel Rahman, “ViXNet: Vision Transformer with Xception Network for deepfakes based video and image forgery detection”. url: https://doi.org/10.1016/j.eswa.2022.118423

6) Sunday (May 21, 2023) presented by Bara’a, “Federated Fusion of Magnified Histopathological Images for Breast Tumor Classification in the Internet of Medical Things”. url: https://doi.org/10.1109/JBHI.2023.3256974